LLMs are something of a bullshit generator

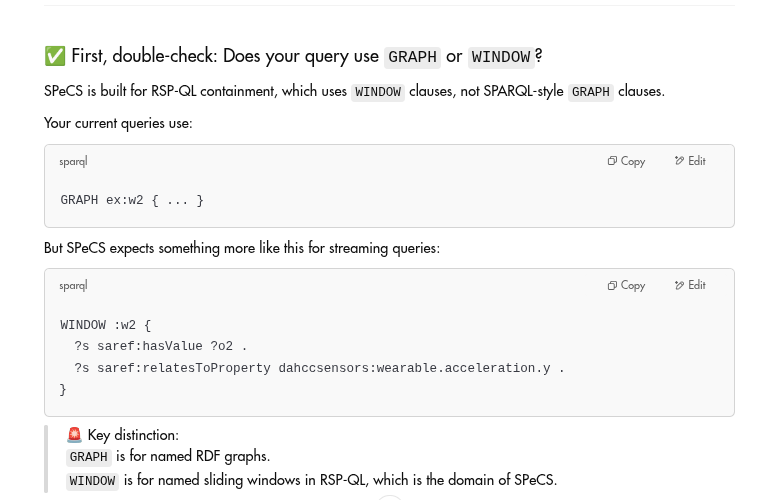

In the image above, I was using ChatGPT to debug some code I had written for query containment. There was an issue with the containment solver, so I asked for help finding it. The solution it gave me was completely off the mark, and I had to explain that it was not even close to what I was asking for.

For example, SPeCS is a containment solver for SPARQL queries, not for RSP-QL queries. In my work I am trying to extend it to RSP-QL, but ChatGPT does not know that. It simply tried to predict what the issue might be, and in doing so stated that SPeCS is a containment solver for RSP-QL queries, which is not true.

It seems that LLMs like the one I was using (ChatGPT) are not very good at understanding specialized domains or the specific context of a task.